Earlier this year, after attending a Microsoft conference, I blogged about how the momentum in developer workflow is shifting rapidly towards running tasks from the command line. Since then, I have continued to develop my skills and knowledge in this area.

This post is a walk-through of my current workflow for bootstrapping new TypeScript web projects for development in VS Code. The high-level tasks I execute are:

- Create a root folder for the application

- Acquire development tools using NPM

- Acquire software framework dependencies using Bower

- Create the VS Code project definition

- Start the application and launch it in a browser

- Add the project assets to source control

Step 1. Create the Project Root Folder

A straightforward step. You could open Windows Explorer and browse to your root working location and create a new folder. However, as we are going to be working inside of the command shell, we can avoid the friction of opening Explorer by running DOS commands.

NOTE: Since moving to the command line for my workflow, I have leaned more and more on ConEmu as my tool of choice for running command line tasks as it is only ever a keystroke away.

I launch ConEmu (CTRL+~) and type:

> cd \code

> md myproject

> cd myproject

That gets me a new project folder named myproject in my development working folder and places the location of my command prompt in the new folder.

Step 2. Acquire Development Tools

To bootstrap a project, I install the following development tools:

- TypeScript: the tsc compiler compiles our TypeScript to JavaScript

- Bower: a package manager for client-side dependencies such as Bootstrap and Angular

- Browser Sync: used to serve the app and to provide live updates in the browser during development

The development tools are installed from the command line using npm. The first step is to initialize the folder with npm init, after which each tool is installed with npm install.

Running these commands creates a package.json file that contains the Node configuration information. It also creates a node_modules folder where the downloaded packages are stored.

NOTE: Later the node_modules folder is excluded from source control as the packages can be pulled down on demand using the npm install command - typically either on the build server or on another developer machine.

Step 3. Acquire Software Dependency Packages

My standard software frameworks are Bootstrap and Angular, so I install them as part of the project setup. As with npm, the first step is to initialize the folder for Bower and then run commands to download and install the packages:

> bower init

> bower install angular --save

> bower install bootstrap --save

Step 4. Create the VS Code Project

For this step, launch VS Code from the current folder using the following command:

> code .

NOTE: This assumes that VS Code is installed on your machine and that its code command is on your PATH.

In the root of the project, add a file named app.cmd containing the following command:

browser-sync start --server --port 3001 --index default.html --files="./*"

This command starts a Browser Sync web server on port 3001 that serves default.html as the index page and watches every file in the root folder.

Browser Sync is a node package that was installed in the tooling step. It watches files for changes and then refreshes the browser to show the updates.

Update package.json by configuring its start script to launch the website with the command that was just created.
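The post does not show the updated package.json. A minimal sketch of the relevant part follows, assuming the start script calls Browser Sync directly with the same arguments as app.cmd; the name and version values are placeholders for whatever npm init generated, and the rest of the file is omitted.

```json
{
  "name": "myproject",
  "version": "1.0.0",
  "scripts": {
    "start": "browser-sync start --server --port 3001 --index default.html --files=\"./*\""
  }
}
```

Because npm adds the project's node_modules/.bin folder to the PATH when it runs a script, this works with a locally installed browser-sync. The escaped quotes around ./* stop a Unix shell from expanding the pattern before Browser Sync sees it.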

With this piece of config in place, the site can now be launched in a browser from the command line using either of the following commands:

# launch using npm

> npm start

# launch using app.cmd

> app

NOTE: All of these tasks can be automated using VS Code's task runner, but I am not yet as familiar with it as I am with the approach shown in this article.

TypeScript projects typically use a tsconfig.json file that defines the compiler settings and identifies the TypeScript files to be compiled. Create a file named tsconfig.json in the root of the folder containing the compiler options (including an output folder of js) and the list of files to compile.

As the project grows, new files are added to the files element and further compiler options are added as needed.
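The original configuration is not reproduced here. A minimal sketch, assuming the compiled output goes to the js folder mentioned later in this post; the target and sourceMap values are illustrative choices, and app.ts is a hypothetical file name.

```json
{
  "compilerOptions": {
    "target": "es5",
    "outDir": "js",
    "sourceMap": true
  },
  "files": [
    "app.ts"
  ]
}
```

tsc reports an error for any entry in the files list that does not exist, so create app.ts (or replace it with your own file names) before building.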

The project also needs a suitable default.html page, which is the index file Browser Sync serves. I use a basic template for this.

The last task in setting up the VS Code project is to add a build task, so that pressing CTRL+SHIFT+B compiles the project. To create the initial tasks file, press CTRL+SHIFT+B and VS Code will prompt you to configure a task runner, which creates a tasks.json file in the .vscode folder.

After creating the task runner, replace the default content with a task definition that compiles the TypeScript assets.
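The task definition itself is not shown. A minimal sketch using the version 2.0.0 tasks.json schema of current VS Code releases (the prompt and default content vary between versions), assuming TypeScript was installed locally with npm so the compiler is invoked through npx (bundled with npm 5.2 and later); the label value is arbitrary.

```json
{
  "version": "2.0.0",
  "tasks": [
    {
      "label": "tsc: build",
      "type": "shell",
      "command": "npx",
      "args": ["tsc", "-p", "."],
      "problemMatcher": ["$tsc"],
      "group": {
        "kind": "build",
        "isDefault": true
      }
    }
  ]
}
```

Marking the task as the default in the build group lets CTRL+SHIFT+B run it without prompting, and the $tsc problem matcher surfaces compiler errors in VS Code's Problems panel. The -p . argument tells tsc to use the tsconfig.json in the project root.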

At this point you should be able to press CTRL+SHIFT+B and see the project build. Later, once you have TypeScript files, you will see them compiled into the js folder, which is the output location configured in the compiler options earlier.

Step 5. Start the Application and Launch it in a Browser

You should now be able to run npm start and see the default page load up in a browser.

If that has worked so far... well done!

Step 6. Add Assets to Source Control

After all of that hard work, the last thing you want is to lose what you have created. The final step is to configure Git and commit the project to source control. To start with, create a Git ignore file that excludes the content generated by the TypeScript compiler and the downloaded packages.

The file should be named .gitignore and contain the following definition.

NOTE: Read Scott Hanselman's article to learn how to create files that start with a dot in Windows.

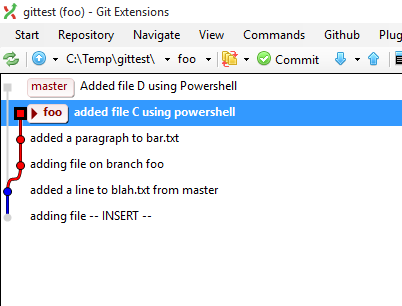

With the .gitignore configuration in place, all that is left is to run Git commands to initialise the repository and commit the assets to version control:

> git init

> git add .

> git commit -m "Initial Commit"